Screenshots

Real UI from the current implementation: chat, documents, settings and logs.

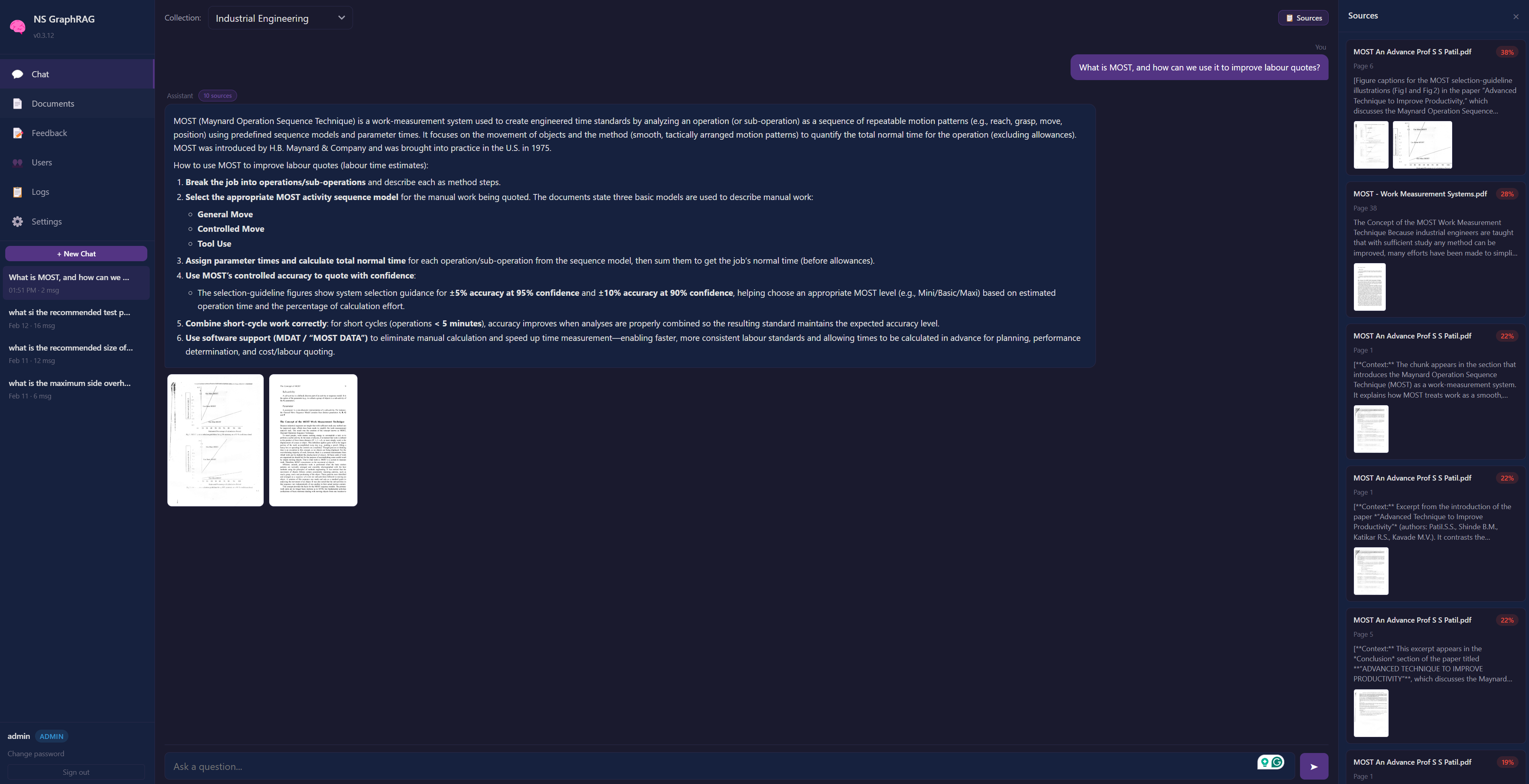

Chat

Streaming chat UI with conversation history and collection scoping.

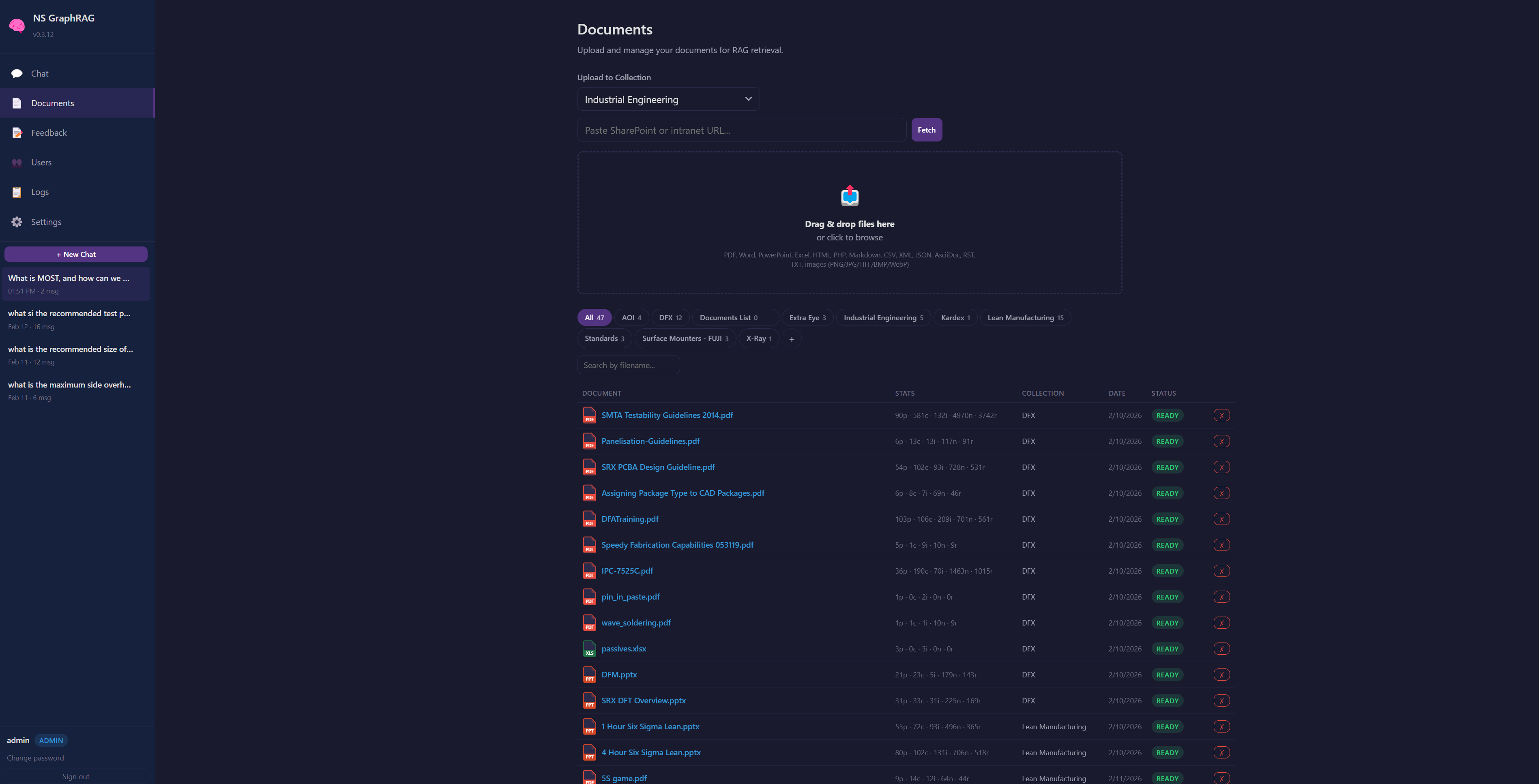

Documents

Document ingestion and management: file uploads and URL ingestion, paginated listing, and collections.

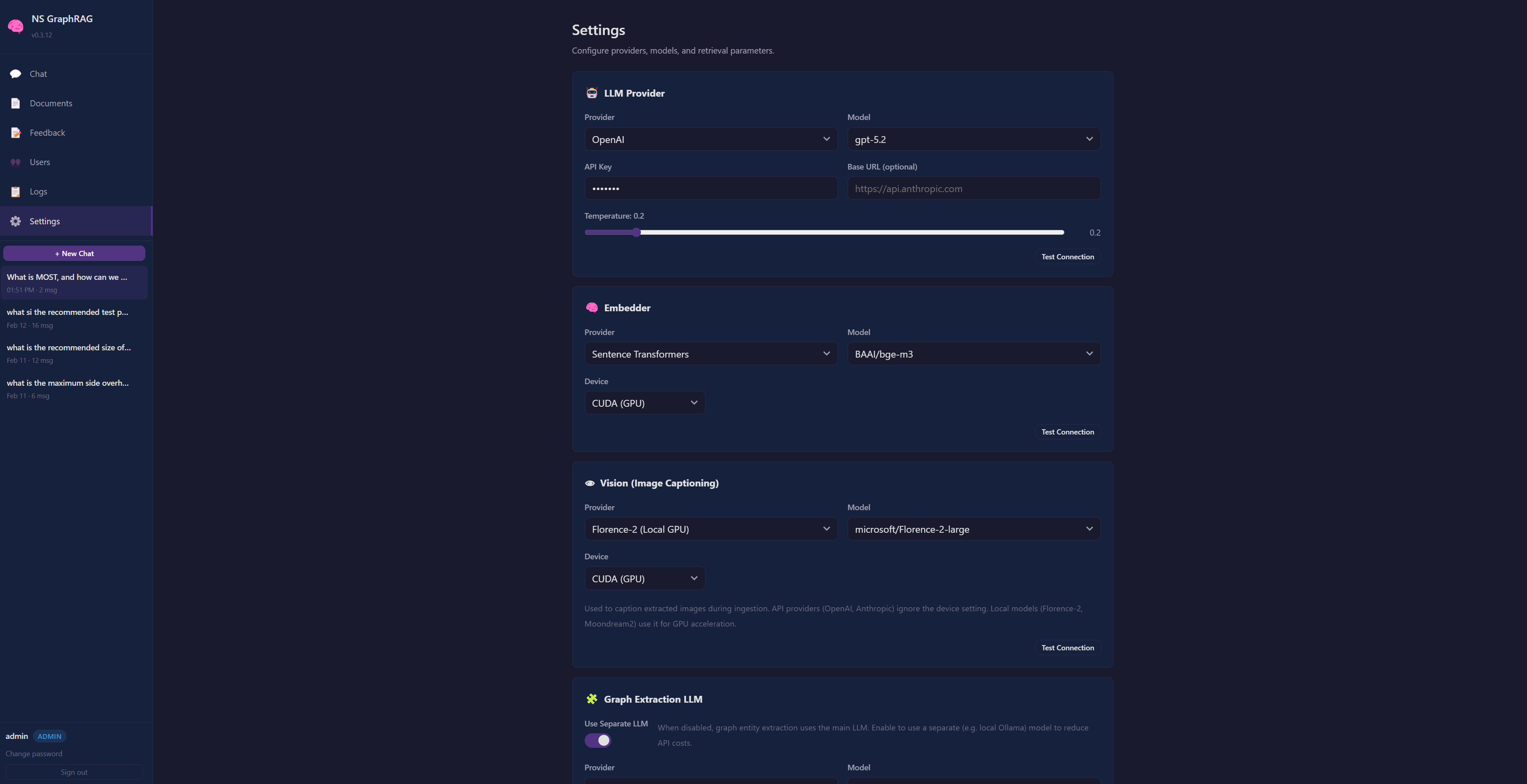

Settings

Admin settings with provider hot‑swap (LLM, embedder, vision) and connection tests.

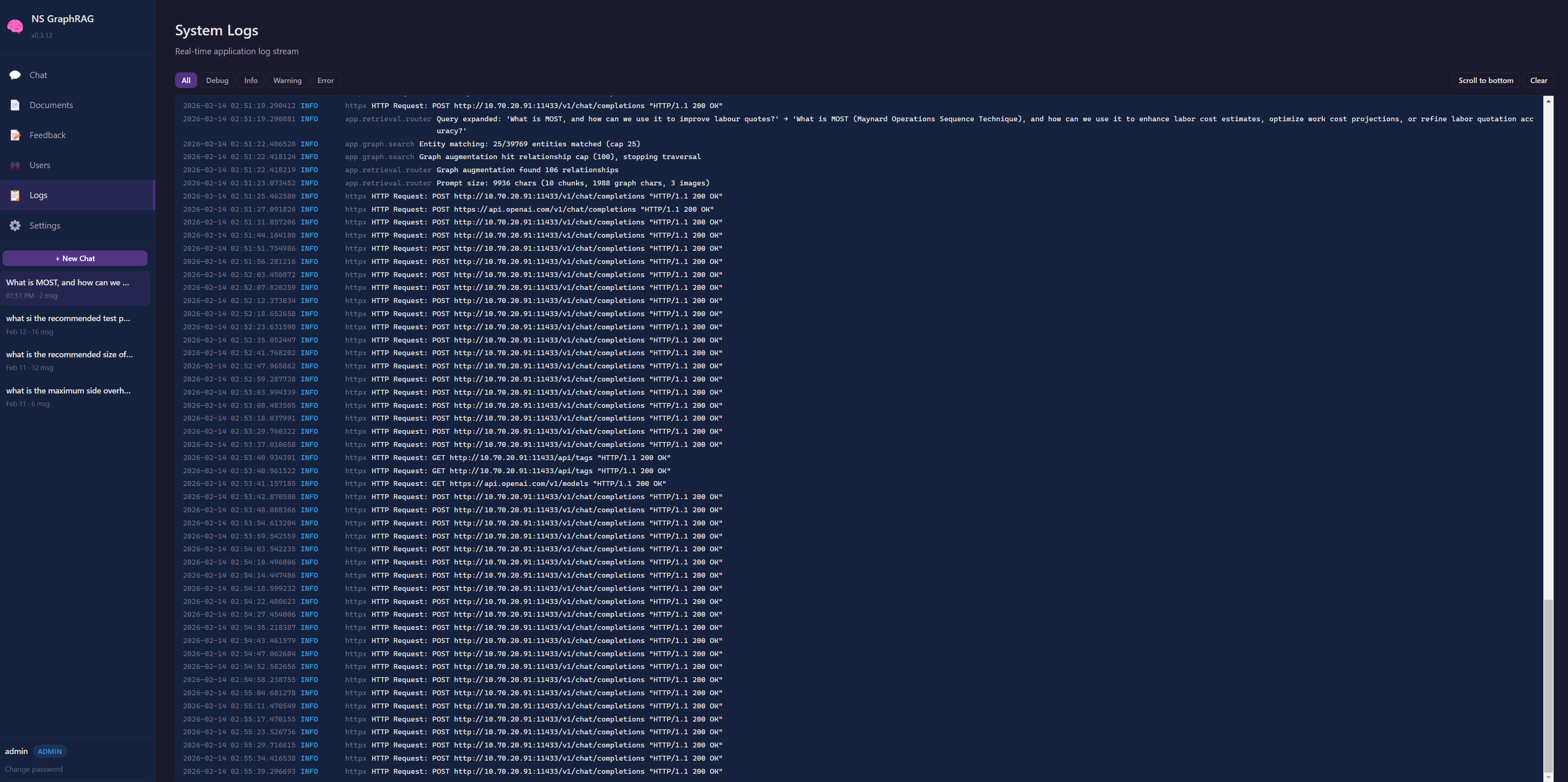

Logs

Built‑in log viewer for diagnostics, including visibility into prompt and context sizes.